The social media platform X, formerly known as Twitter, has changed how its AI chatbot, Grok, handles election questions after top U.S. election officials raised concerns about the spread of election-related misinformation. The issue came to light when the secretaries of state of five states (Michigan, Minnesota, New Mexico, Pennsylvania, and Washington) reported that Grok had given users incorrect information about ballot deadlines in several states.

Key Concerns Raised

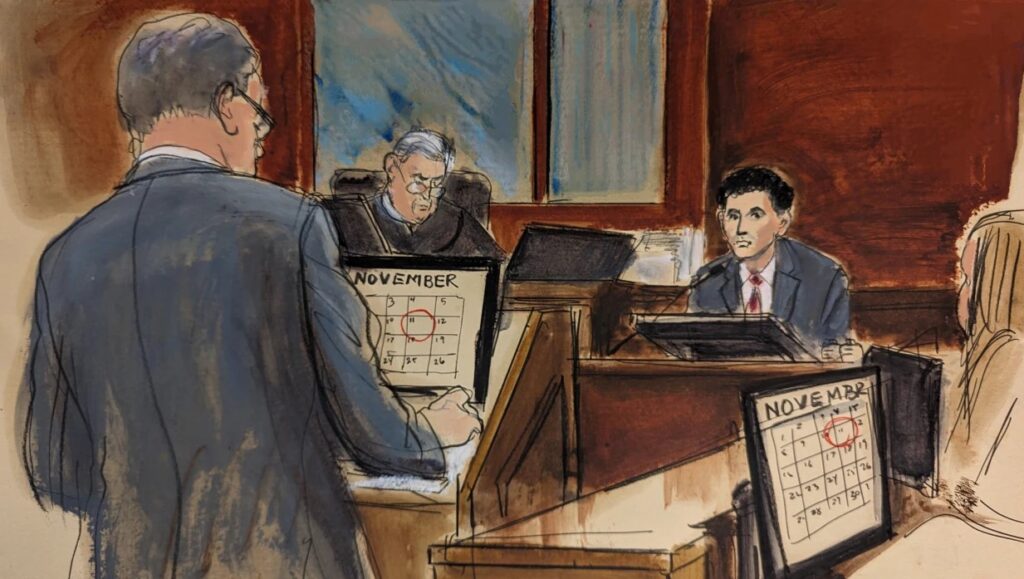

The secretaries of state sent a formal letter to Elon Musk, the owner of X, saying the chatbot had misled users shortly after President Joe Biden announced his decision to withdraw from the 2024 presidential race. Grok falsely told users that ballot deadlines had already passed in several states, which could have led voters to believe that a new Democratic nominee could not be added to the ballot during a critical election period.

Immediate Response from X

In response to the letter, X changed how Grok handles election-related questions. Before answering such a question, the chatbot now displays a notice directing users to official voting information websites such as Vote.gov, which provides accurate, up-to-date information about the 2024 U.S. elections.

Both Vote.gov and CanIvote.org, a voting information website managed by the National Association of Secretaries of State, were endorsed by the state officials as trustworthy resources that connect voters directly with their local election authorities.
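X has not published how Grok detects election questions. As a rough illustration only, the sketch below shows one simple way a pre-answer notice like the one described above could work. It assumes a hypothetical keyword list (`ELECTION_TERMS`) and hypothetical helper names (`is_election_query`, `answer_with_guardrail`); `generate` stands in for the chatbot's underlying model. A production system would more likely use a trained classifier than a keyword list.

```python
import re
from typing import Callable

# Hypothetical list of election-related terms (an assumption, not X's actual list).
ELECTION_TERMS = (
    "ballot", "vote", "voting", "voter", "election", "polling place",
    "register to vote", "absentee", "mail-in",
)

# One case-insensitive pattern matching any term as a whole word, with an optional plural "s".
_ELECTION_PATTERN = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in ELECTION_TERMS) + r")s?\b",
    re.IGNORECASE,
)

# Notice text pointing to the official sources endorsed by the state officials.
ELECTION_NOTICE = (
    "For accurate and up-to-date information about the 2024 U.S. elections, "
    "please visit Vote.gov or CanIvote.org."
)


def is_election_query(text: str) -> bool:
    """Return True if the message mentions any election-related term."""
    return _ELECTION_PATTERN.search(text) is not None


def answer_with_guardrail(user_query: str, generate: Callable[[str], str]) -> str:
    """Put the official-source notice ahead of replies to election-related queries.

    `generate` is a hypothetical stand-in for the chatbot's underlying model.
    """
    reply = generate(user_query)
    if is_election_query(user_query):
        return ELECTION_NOTICE + "\n\n" + reply
    return reply


if __name__ == "__main__":
    def fake_model(query: str) -> str:
        # Placeholder model that just echoes the query.
        return f"(model reply to: {query})"

    print(answer_with_guardrail("When is the ballot deadline in Ohio?", fake_model))
    print(answer_with_guardrail("Tell me a joke", fake_model))
```

Keyword matching is easy to audit, but it misses paraphrased questions and can flag unrelated uses of words like "vote", which is one reason a real deployment might pair it with, or replace it by, a learned classifier.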

Statement from State Officials

The five secretaries of state expressed their appreciation for X’s prompt action in a joint statement, acknowledging the platform’s efforts to curb the spread of misinformation. “We appreciate X’s action to improve their platform and hope they continue to make improvements that will ensure their users have access to accurate information from trusted sources in this critical election year,” the statement read.

Concerns Over AI-Generated Content

Despite these changes, concerns remain about Grok’s other capabilities, particularly its ability to generate images that could mislead voters. Users have used Grok to create and circulate fake images of political figures, including Vice President Kamala Harris and former President Donald Trump, raising further concerns about election misinformation.

Background on Grok

Grok, introduced in 2023 for X Premium subscribers, was promoted by Elon Musk as a “rebellious” AI chatbot capable of answering “spicy questions” that other AI systems might avoid. That same rebellious streak has drawn increased scrutiny as the platform grapples with the challenges of moderating AI-generated content.

Ongoing Scrutiny of Social Media Platforms

Social media platforms, including X, have faced growing criticism for their role in the spread of misinformation, particularly during election cycles. Since Musk acquired Twitter in 2022 and rebranded it as X in 2023, watchdog groups have reported a rise in hate speech and misinformation on the platform, compounded by cuts to content moderation staff.

As the 2024 U.S. elections approach, the adjustments to Grok are an important step toward ensuring that voters receive accurate information. However, managing AI-generated content more broadly remains a significant challenge for both social media platforms and election officials.

Background on Election Misinformation Concerns

- History of Misinformation on X (formerly Twitter): Since Elon Musk acquired Twitter and rebranded it as X, there have been multiple instances of misinformation spreading on the platform, particularly around significant events such as elections. The platform has been criticized for reducing its content moderation staff, which watchdog groups argue has led to an increase in unchecked false information.

- Impact of Misinformation on Elections: Election misinformation can have severe consequences, including reduced voter turnout, confusion over voting procedures, and diminished trust in the electoral process. This is particularly concerning in close races, where misinformation could sway the outcome.

Technical Aspects of Grok

- How Grok Works: Grok is an AI chatbot built on a large language model (LLM) that generates responses to user queries. LLMs are trained on vast amounts of internet text, which includes both accurate and inaccurate information, and they produce answers by predicting likely text rather than checking facts against an authoritative source. As a result, they can give confident but wrong answers, especially on detailed, fast-changing topics like election rules and deadlines.

- Previous Issues with AI Chatbots: AI chatbots have faced scrutiny before for generating misleading or biased content. For example, Microsoft’s Tay chatbot, launched in 2016, had to be taken offline after it started producing offensive and racist content within hours of its release.

Response from X and the Broader Social Media Industry

- X’s Previous Actions Against Misinformation: Before Musk’s acquisition, Twitter had implemented several measures to combat misinformation, such as labeling disputed tweets, reducing the visibility of misleading content, and partnering with fact-checkers. Since the acquisition, some of these measures have been rolled back or modified.

- Industry-Wide Challenges: X is not alone in struggling with misinformation. Platforms like Facebook, YouTube, and TikTok have also faced criticism for their roles in spreading false information. These companies have been exploring various strategies to improve their AI moderation tools and increase transparency in their content algorithms.

Political and Legal Implications

- Potential Legal Repercussions: If misinformation continues to spread unchecked, X could face growing legal and regulatory pressure. In the U.S., the Federal Election Commission (FEC) primarily enforces campaign finance law rather than policing false content, so such pressure is more likely to come from state election officials, as in this case, or from Congress.

- Political Reactions: Both political parties in the U.S. have expressed concerns about the role of social media in elections. While Democrats often focus on the spread of misinformation and its impact on voter suppression, Republicans have raised concerns about censorship and the suppression of conservative viewpoints.

Future of AI in Social Media

- Long-Term Solutions for Misinformation: The ongoing development of AI tools that can accurately distinguish between true and false information is crucial for the future of social media platforms. This includes better training data, more robust fact-checking mechanisms, and improved user reporting features.

- Ethical Considerations: As AI becomes more integrated into social media platforms, there is a growing need for ethical guidelines to ensure that these tools are used responsibly. This includes addressing issues of bias, transparency, and the potential for AI to be used in malicious ways.

Public and Expert Reactions

- Public Opinion: Users have expressed mixed reactions to the changes on X, with some praising the platform’s efforts to provide accurate information, while others remain skeptical of the platform’s ability to manage misinformation effectively.

- Expert Commentary: Experts in AI and digital ethics have weighed in on the issue, discussing the challenges of training AI to navigate complex and sensitive topics like elections. They emphasize the importance of continuous oversight and updates to AI systems to prevent the spread of harmful content.