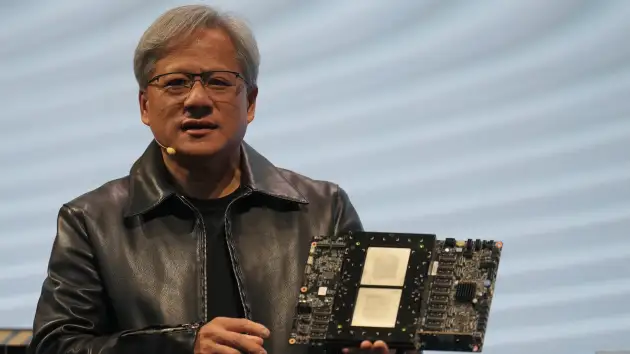

Nvidia on Monday unveiled the H200, a graphics processing unit designed for training and deploying the kinds of artificial intelligence models that are powering the generative AI boom.

The new GPU is an upgrade from the H100, the chip OpenAI used to train its most advanced large language model, GPT-4. Big companies, startups and government agencies are all competing for a limited supply of the chips.

H100 chips cost between $25,000 and $40,000, according to an estimate from Raymond James, and thousands of them working together are needed to create the biggest models in a process called “training.”

Excitement over Nvidia’s AI GPUs has supercharged the company’s stock, which is up more than 230% so far in 2023. Nvidia expects around $16 billion of revenue for its fiscal third quarter, up 170% from a year ago.

The key improvement with the H200 is that it includes 141GB of next-generation “HBM3” memory that will help the chip perform “inference,” or using a large model after it has been trained to generate text, images or predictions.

Nvidia said the H200 will generate output nearly twice as fast as the H100. That is based on a test using Meta’s Llama 2 LLM.

The H200, which is expected to ship in the second quarter of 2024, will compete with AMD’s MI300X GPU. AMD’s chip, like the H200, has additional memory over its predecessors, which helps fit big models on the hardware to run inference.

Nvidia said the H200 will be compatible with the H100, meaning that AI companies that are already training with the prior model won’t need to change their server systems or software to use the new version.

Nvidia says it will be available in four-GPU or eight-GPU server configurations on the company’s HGX complete systems, as well as in a chip called GH200, which pairs the H200 GPU with an Arm-based processor.

However, the H200 may not hold the crown of the fastest Nvidia AI chip for long.

While companies like Nvidia offer many different configurations of their chips, new semiconductors often take a big step forward about every two years, when manufacturers move to a different architecture that unlocks more significant performance gains than adding memory or other smaller optimizations. Both the H100 and H200 are based on Nvidia’s Hopper architecture.

In October, Nvidia told investors that it would move from a two-year architecture cadence to a one-year release pattern because of high demand for its GPUs. The company displayed a slide suggesting it will announce and release its B100 chip, based on the forthcoming Blackwell architecture, in 2024.